It’s NOT About The Agents: What Most Generative AI Playbooks Get Wrong.

What Winning Enterprises Know About Enterprise Generative AI That You Don’t (Yet)

Earlier this year, I wrote about “Systems of Action.” It struck a chord.

This is the follow-up. This post takes what I’ve learned in the trenches over the past seven months working with close to 150 enterprise customers deploying generative AI in production and at scale (below is one of them, NatWest Group's Richard Healy).

Here is what everyone’s worried about:

Only 16% of enterprises have scaled Generative AI enterprise-wide.

Just 1 in 4 AI initiatives are delivering the expected ROI.

The good news is that I’ve met those who are succeeding. In this blog, I unpack the lessons I’ve learned from them. I share the specifics: what worked, what didn’t, and my take on what might really be going on behind the scenes.

NatWest Group's CIO for Digital Transformation, Richard Healy, and I presented at the Gartner Conference on the firm’s incredible results with Generative AI in production: 11M+ customer queries handled, 94% adoption, and up to 150% boost in customer satisfaction.

If you’re new to this blog, welcome! I hope you’ll enjoy it enough to consider subscribing and sharing it.

I write about what I’ve learned as a technology executive over the last 25 years. I’ve helped build startups from inception and scale them. I’ve been acquired. I’ve acquired and invested in companies. I’ve worked at mid-size firms through IPO. I helped scale Data, AI and Analytics businesses at Microsoft and Google.

Below are my own thoughts. No one’s paying me to write this: not my employer, not anyone.

Please feel free to comment, share your thoughts. I welcome smart debates. I write this to learn from smart people, regardless of whether they agree with me or not.

If this resonates with you, I hope you’ll consider subscribing. It’s free!

Stage One: Recognizing That The Path Isn’t Linear

If you’ve got kids, you’ve probably heard this one: “What’s the path to success?”

They imagine it’s a clean, upward line—predictable, repeatable, neatly mapped.

But we know better.

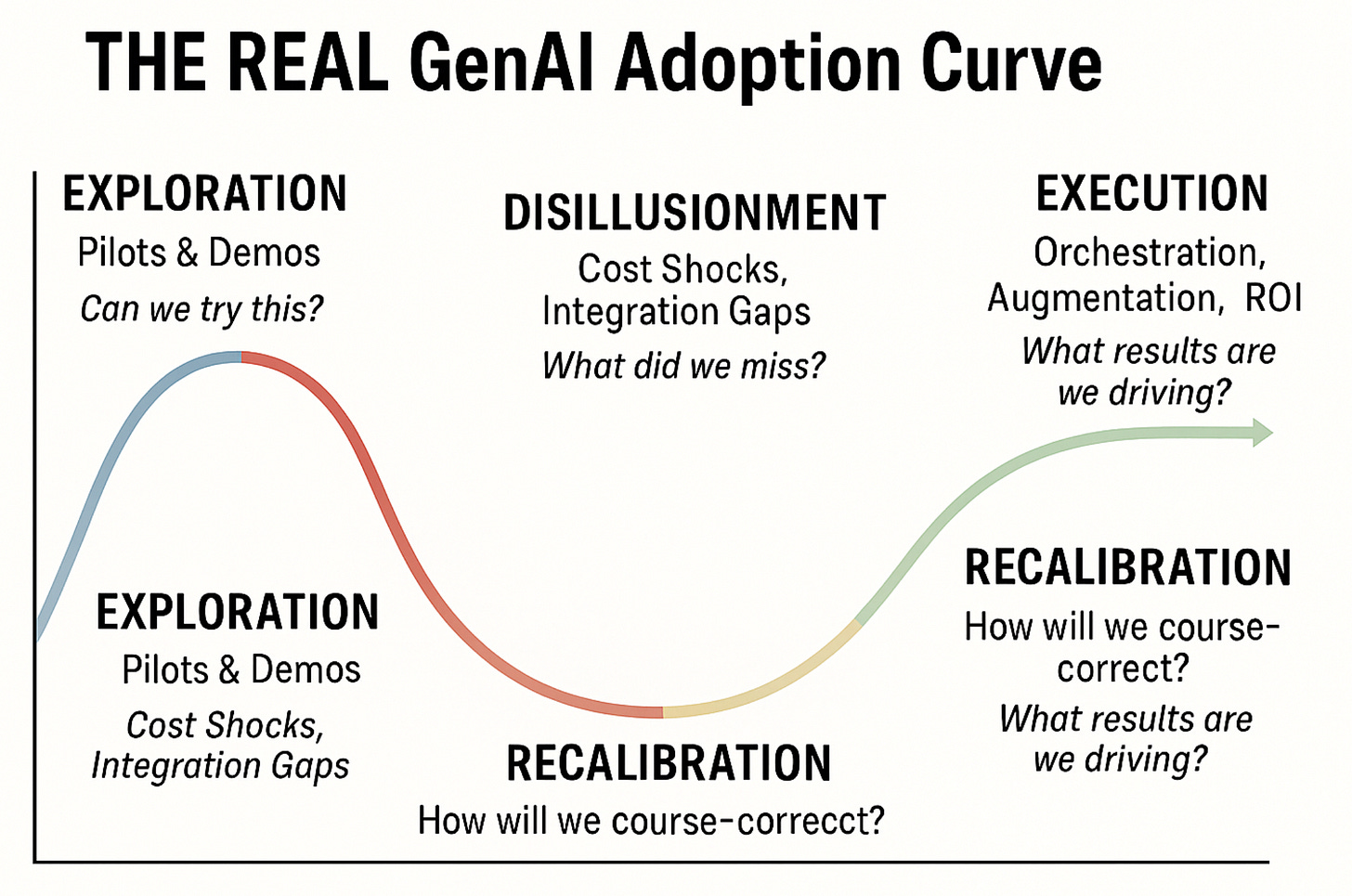

We know that the journey is not a straightforward staircase. It’s more like the Gartner Hype Cycle.

In every Gen AI conversation I’ve had with enterprise leaders, one thing stands out:

Even the most well-funded, well-staffed companies discover that the journey is full of surprises, setbacks, and sideways moves.

The ones making real progress are the ones who’ve internalized this.

They’re not clinging to early hype or stuck in pilot purgatory.

They’re asking, “Where are we on the curve, and what do we need to learn next?”

Many orgs have already passed the peak. Now comes the harder part. The part where you wonder how you’re going to reach the plateau of productivity…

The trough of disillusionment is a phase where initial excitement surrounding a new technology fades. Early implementations and experiments fail to deliver on promises. This can lead to a decline in interest and investment as people realize the technology's limitations and challenges.

At this stage, the conversation shifts from the illusion of experimentation to the realities of full-blown production. We ask:

What will it cost to operate at scale?

What are the performance trade-offs?

How do we secure, govern, and monitor these systems effectively?

This shift unveils new truths: Once GenAI systems move into production, the cost of inference could become the real operational burden—directly tied to application success. As NVIDIA CEO Jensen Huang noted, “Inference is going to be a hundred times larger than training.” In other words: most of the cost doesn’t come upfront—it comes after you launch.

Budgeting for GenAI isn’t just an exercise in forecasting. It’s a design challenge, and one that can sink projects if the design is wrong.
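To make the "cost comes after launch" point concrete, here is a minimal back-of-the-envelope sketch. Every number in it is an assumption for illustration: the query volumes, the token counts, and the per-million-token prices are placeholders, not any vendor's actual rates, and `Workload` and `monthly_inference_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload; all figures are illustrative assumptions."""
    queries_per_month: int
    input_tokens_per_query: int
    output_tokens_per_query: int


def monthly_inference_cost(w: Workload, usd_per_1m_input: float, usd_per_1m_output: float) -> float:
    """Inference spend grows linearly with query volume and tokens per query."""
    input_cost = w.queries_per_month * w.input_tokens_per_query / 1_000_000 * usd_per_1m_input
    output_cost = w.queries_per_month * w.output_tokens_per_query / 1_000_000 * usd_per_1m_output
    return input_cost + output_cost


# Placeholder prices per million tokens, not real vendor rates.
PRICE_IN, PRICE_OUT = 1.0, 4.0

pilot = Workload(queries_per_month=10_000, input_tokens_per_query=2_000, output_tokens_per_query=500)
production = Workload(queries_per_month=1_000_000, input_tokens_per_query=2_000, output_tokens_per_query=500)
# Same production volume, but with a prompt trimmed to half the input tokens.
trimmed = Workload(queries_per_month=1_000_000, input_tokens_per_query=1_000, output_tokens_per_query=500)

pilot_cost = monthly_inference_cost(pilot, PRICE_IN, PRICE_OUT)
production_cost = monthly_inference_cost(production, PRICE_IN, PRICE_OUT)
trimmed_cost = monthly_inference_cost(trimmed, PRICE_IN, PRICE_OUT)

print(f"pilot:      ${pilot_cost:,.2f}/month")
print(f"production: ${production_cost:,.2f}/month")
print(f"trimmed:    ${trimmed_cost:,.2f}/month")
```

Under these assumed figures the pilot looks cheap, and the bill only becomes visible at production volume. The third line is the design lever: shortening the prompt is an architecture decision, not a forecasting one.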

According to Gartner, over 40% of agentic AI projects will be canceled by the end of 2027, due to escalating costs, unclear business value or inadequate risk controls (Source: Gartner, June 25, 2025).

This isn’t just a technical risk. It’s strategic. It’s financial.

The good news is that it’s avoidable if you plan early and design with discipline.

Stage Two: From Vertical Silos to Horizontal Ecosystems

This is when executives realize that they face a fork in the road—one that’s less about architecture and more about strategic alignment.

There are three dominant options for GenAI deployment. Each one reveals how your organization thinks about scale, control, and complexity:

The Single-Cloud Bet:

It looks clean on paper—consolidate everything under one provider.

This approach ignores the fact that most enterprises are already multi-cloud, many hybrid, often by necessity.

A single-cloud strategy, then, can create silos, reduce flexibility, and lock customers into a single vendor’s plan.

The All-in-One Application:

Solving one problem in one app sounds efficient.

But in practice, employees work across 10–15 applications daily.

These point solutions don’t integrate, don’t scale horizontally, and quickly become shadow IT nightmares.

The Horizontal Strategy:

This is the only one that meets the enterprise where it is.

It spans clouds, connects legacy with modern apps, and integrates directly into employee workflows.

What does this mean in practice? It means your vendor strategy is now your AI strategy.

The winning enterprises pick vendors by looking at incentive alignment. If a vendor's business model depends on you staying locked into their cloud or app, their incentives might be misaligned with your goals.

In other words: Strategy > Stack. I explain this in the short clip below (full video here).

Stage Three: Augment What Exists—Don’t Replace It.

aka the concept of “Agent Minus”.

There’s a growing assumption that GenAI agents will become the centerpiece of every enterprise workflow. That’s not what I’m seeing in practice.

In most successful deployments, the value equation looks more like 70/30—with automation and integration doing the heavy lifting, and agents serving as intelligent layers on top.

A staggering 99% of enterprise data remains untouched by generative AI. Most of that untouched data lives in the workflows enterprises have spent decades refining:

Role-based approval chains in financial services

Maintenance diagnostics in manufacturing

Patient intake and billing systems in healthcare

Scheduling, routing, and inventory logic in logistics

These systems aren’t broken. In fact, they’re often your moat.

The opportunity isn’t to rip them out—it’s to extend them with GenAI.

At Heineken, success didn’t start with agents—it started with harmonizing 100,000+ data elements, digitizing their route to market, and simplifying operations. Only then could agents add strategic lift.

At USAA, agents don’t operate in isolation. They’re carefully tethered to trusted knowledge sources and process automations—so when an AI agent acts on a police report or an insurance form, the downstream journey is already defined and reliable.

The answer comes from marrying deterministic flows with agentic flows, not from ripping out and replacing automations that have proven to return value for your enterprise. Here are additional examples that might resonate in your industry:

Airlines that blend new AI copilots with 30-year-old flight ops databases.

Banks using GenAI to review documents and cross-reference with existing compliance systems.

Healthcare orgs embedding LLMs inside patient journey orchestration—not on the outside.

AI strategy isn’t about chasing the “new stack”.

It’s about how you bring AI into what you’ve already built and what already works.
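Here is one way "marrying deterministic flows with agentic flows" could look in code. This is a minimal sketch under stated assumptions: the `Claim` type, the approval thresholds, and `summarize_report_stub` are all hypothetical, and the stub stands in for whatever LLM or agent call you would actually use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Claim:
    claim_id: str
    amount: float
    report_text: str


def summarize_report_stub(text: str) -> str:
    """Stand-in for an agent/LLM call (hypothetical). Returns the first sentence."""
    return text.split(".")[0].strip() + "."


def route_for_approval(amount: float) -> str:
    """The existing deterministic rule, left untouched. Thresholds are illustrative."""
    if amount < 1_000:
        return "auto-approve"
    if amount < 25_000:
        return "adjuster"
    return "senior-adjuster"


def process_claim(claim: Claim, summarize: Callable[[str], str] = summarize_report_stub) -> dict:
    # Agentic step: turn unstructured text into something a reviewer can scan.
    summary = summarize(claim.report_text)
    # Deterministic step: the proven approval chain still decides who acts.
    queue = route_for_approval(claim.amount)
    return {"claim_id": claim.claim_id, "summary": summary, "queue": queue}


result = process_claim(
    Claim("C-1", 4_200.0, "Rear bumper damaged in parking lot. No injuries reported.")
)
print(result)
```

The agent adds a layer on top (the summary), while routing stays in the rule the business already trusts. Swapping the stub for a real model would not change who approves what.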

Stage Four: The “Unlock” is Not in Building Agents. It’s in Orchestrating Them.

aka the concept of “Agent Plus”.

The industry conversation today is dominated by “agent building”.

But the real unlock, in my observation, will come from orchestrating them.

That’s because in the enterprise, it’s never just one agent. It’s:

Multiple agents working in tandem

Agents embedded across ecosystems—cloud, apps, custom stacks

Agents interacting with existing automation layers

Tools and agents cooperating under shared rules

That last one is a key soundbite:

It’s not just agents. It’s tools and rules—working with agents.

And orchestration isn’t just about making things run. It’s about facilitating complex task execution across multiple layers of intelligence and automation.

Enterprises that have succeeded master at least five key capabilities:

Multi-Agent Coordination and Collaboration

Agents need a protocol for communication, context sharing, and delegation. Without this, your agents become isolated islands: smart, but siloed.

Cross-Ecosystem Integration

Agents will live in different environments. They must work across cloud platforms, business applications, and internally-built tools. A unified orchestration layer bridges these silos.

Tool + Rule Management

As described in “Stage Three”, agents don’t operate alone. They interact with business tools governed by policies, access controls, and operational rules. Orchestration means managing these interactions with precision.

Supervision, Routing, and Planning

Who takes action? When? What happens if something fails? Enterprises need intelligent routing, escalation paths, and oversight, sometimes even having one agent validate another’s work.

AgentOps: Discovery, Monitoring, Optimization

As the agent layer grows, so does complexity. Enterprises will need full agent operations stacks: dashboards, observability, lifecycle management, performance tuning.

This is where the next wave of value will emerge—not from more agents, but from better orchestration.
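To show how a few of these capabilities fit together, here is a toy orchestration layer. It is a sketch, not a reference design: the `Orchestrator` class, the role names, and both agents are hypothetical, and a real deployment would replace the in-memory log with proper observability tooling.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Orchestrator:
    """Toy orchestration layer: registry, access rules, routing, and a run log."""
    agents: dict = field(default_factory=dict)   # agent name -> handler
    allowed: dict = field(default_factory=dict)  # role -> set of agent names
    log: list = field(default_factory=list)      # one record per attempted run

    def register(self, name: str, handler: Callable[[str], str], roles: list) -> None:
        self.agents[name] = handler
        for role in roles:
            self.allowed.setdefault(role, set()).add(name)

    def run(self, role: str, agent_name: str, task: str) -> tuple:
        result: Optional[str] = None
        if agent_name not in self.allowed.get(role, set()):
            outcome = "denied"  # rule management: this role may not use this agent
        else:
            try:
                result = self.agents[agent_name](task)
                outcome = "ok"
            except Exception as exc:
                outcome = "escalated"  # supervision: failures go to a human
                result = f"needs human review: {exc}"
        # AgentOps: every attempt is recorded, including denials and failures.
        self.log.append({"role": role, "agent": agent_name, "task": task, "outcome": outcome})
        return outcome, result


def billing_agent(task: str) -> str:
    return f"invoice drafted for {task}"


def flaky_agent(task: str) -> str:
    raise RuntimeError("upstream timeout")


orch = Orchestrator()
orch.register("billing", billing_agent, roles=["finance"])
orch.register("flaky", flaky_agent, roles=["finance"])

ok = orch.run("finance", "billing", "order 42")
denied = orch.run("support", "billing", "order 42")
escalated = orch.run("finance", "flaky", "order 42")
print(ok, denied, escalated, sep="\n")
```

Note that none of the value here comes from the agents themselves, which are one-liners. It comes from the layer that decides who may call what, what happens on failure, and what gets recorded.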

Which brings me to PepsiCo. When Magesh Bagavathi, the company’s Global Head of Data, Analytics & AI, described their transformation, his reference to “Freedom within a framework” made me smile. It’s a principle I learned at Microsoft—and one I’ve carried with me since.

The idea is simple but powerful: Enable autonomy, but within guardrails. Empower teams to move fast, but within a system that scales and governs intelligently.

That’s orchestration at its best.

And it’s why PepsiCo’s transformation is working: they’ve created a centralized platform and semantic data layer, but left room for frontline teams to innovate.

They’ve aligned decision intelligence and operations—without over-engineering it.

Stage Five: The Final Unlock: The Power of Semantic Understanding

This last interview brings me back full circle—to a blog I wrote about the "Gen AI-fication" of Analytics.

You might have picked up Bagavathi’s comment regarding "application sprawl." Without the right approach, they ended up in a situation where 80% to 90% of their reports had little to no usage.

Remember the customer who’d told me that their “users had built 20,000 dashboards” and that “18,000 of them were used by less than 8 users”? (see my breakdown of the outdated analytics supply chain Gen AI is about to disrupt here).

At PepsiCo, they’re addressing it head-on:

They’ve gone from 27,000 reports down to just 50 AI-powered analytics consoles—designed not just to inform, but to actually be used.

And that shift—toward semantic clarity—is a common thread I’ve seen in every successful enterprise, not just in the last seven months, but over the past 25 years.

Just like reading a book:

The words matter, but they’re not enough.

What matters is what you understand, what you trust, and what you do with that understanding.

So yes, the loop is now closed.

We began this journey talking about systems of action.

And we end with the realization that the most powerful driver of action isn’t intelligence alone—it’s semantic understanding.

That’s the unlock.

Understanding is what turns insight into execution.

Here is an image to help make this concept stick!

Bonus

If you don’t have time to watch the full videos, here are some key stats from the incredible leaders quoted above:

Heineken’s Ronald den Elzen (Chief Digital & Technology Officer)

400M consumers. 1M customers every single day.

Heineken's AI playbook has 5 bold moves and a few key use-cases. It starts with harmonizing ~100,000 data elements to unlock AI and keeps a pragmatic focus on “adoption, adoption, adoption,” as Ronald den Elzen, the company’s Chief Digital and Technology Officer, explains it.

Their digital backbone is in fact core to a fundamental business transformation. Their 5 moves include:

Digitize the entire route to the consumer.

Establish AI and data management as a separate pillar.

Simplify and automate for end-to-end business transformation.

Create a secure cybersecurity digital backbone.

Build a digitally savvy organization.

(full video here)

PepsiCo’s Magesh Bagavathi (SVP, Head of Data, AI & Analytics)

1.4B consumers. 320,000 employees, serving 1.4 billion smiles a day and driving a 200% increase in customer loyalty.

PepsiCo's AI playbook starts with handling 60 petabytes of data, growing at 2X YOY, and building a lean and efficient supply chain that touches over 2,400 “applications of decision making”.

Magesh Bagavathi, SVP, Global Head of Data, Analytics & AI, explains that their digital transformation is a "team sport" focused on empowering every employee. Their playbook includes:

Unified Data: Moving from 8 data lakes to one modern data lake platform with a medallion architecture.

Golden KPIs: Establishing one set of "golden reports" and data for the entire company, aiming to cut 27,000 reports down to 50 AI-powered consoles.

AI for BI: Shifting from static reports to AI-powered Business Intelligence consoles for self-service and guided insights.

Persona-Centric Tools: Rebuilding tools and capabilities to be centered on the needs of frontline workers.

Digital Backbone: Creating a centralized, governed, and managed digital platform for the entire organization to build upon.

(full video here)

USAA’s Ramnik Bajaj (SVP, CDAI Officer)

200+ AI solutions already in production, where agents are the “killer app” for unstructured data.

Their playbook is built on a foundation of trust, member focus, and a pragmatic approach to value generation.

Their strategy for supercharging the business with AI includes:

Unstructured Data as a "First-Class" Asset: Using GenAI to unlock value from unstructured data like police reports, images, and home inspections.

A Focus on Observability: Instead of getting bogged down in "explainability," they focus on observing every AI output to ensure accuracy and reliability.

Constrained AI for Reliability: Preventing hallucinations by constraining large language models to specific, verified knowledge bases—like the 20,000 documents their frontline staff AI uses.

From Chatbots to AI Agents: Evolving from simple Q&A bots to AI agents that can access company tools and take action on behalf of members.

Small Language Models for Big Impact: Planning to use smaller, more cost-effective models like IBM's Granite for specific, controlled problems.

(full video here)
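The “Constrained AI” and “Observability” points in the USAA list above can be illustrated with a small sketch. This is not USAA's implementation: the two documents, the naive keyword-overlap retrieval, and the `min_overlap` threshold are all assumptions for illustration, and a production system would use a real retriever and a real model.

```python
import re
from typing import Optional

# Hypothetical verified knowledge base (document id -> approved text).
KNOWLEDGE_BASE = {
    "doc-001": "Windshield claims are filed through the glass claims form.",
    "doc-002": "Home inspections must be scheduled within 30 days of policy issue.",
}

audit_log: list = []  # observability: every question and outcome is recorded


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, min_overlap: int = 2) -> Optional[tuple]:
    """Naive keyword-overlap retrieval; returns (doc_id, text) or None."""
    q = tokens(question)
    best_id, best_score = None, 0
    for doc_id, text in KNOWLEDGE_BASE.items():
        score = len(q & tokens(text))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_id is None or best_score < min_overlap:
        return None
    return best_id, KNOWLEDGE_BASE[best_id]


def answer(question: str) -> dict:
    hit = retrieve(question)
    if hit is None:
        # Constraint: no verified source means no answer, rather than a guess.
        response = {"answer": None, "source": None, "status": "no verified source"}
    else:
        doc_id, text = hit
        response = {"answer": text, "source": doc_id, "status": "grounded"}
    audit_log.append({"question": question, **response})
    return response


grounded = answer("Which form is used for windshield claims?")
refused = answer("What is the weather in Paris?")
print(grounded)
print(refused)
```

The design choice worth noticing is the refusal path: when nothing in the verified set matches well enough, the system says so and logs it, which gives you something to observe instead of something to explain after the fact.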

If you like this post, you'll probably also like this one: "Nobody Cares about your System of Intelligence" => https://carcast.substack.com/p/nobody-cares-about-your-system-of